In April of 2023 everything pivoted towards AI. The goals for the qualitative interviews were varied and there was a real sense of urgency. We wanted to better understand this rapidly changing landscape and evolving technology and learn more about how user behaviors and attitudes towards text-to-image (T2I) AI generators and Gen AI content had shifted.

Research plan

I conducted stakeholder interviews across engineering, product and design to gather concerns and questions. I then affinity-mapped all the open questions and extracted themes from them to inform the research questions. I wrote a test plan and worked closely with other UX researchers early on to create the screener and script. We wanted to understand how participants who had adopted T2I generators were using them in their workflows for commercial projects and in their stock search. To understand user concerns around AI, I also screened for and spoke with participants who weren't familiar with T2I generators or hadn't fully adopted them, to unpack their concerns, if any. There were also practical questions: where users would expect to find an entry point to an AI generator on the Adobe Stock website, when users might switch from traditional searching and filtering to prompting an AI, and how many times, on average, users prompted an AI before getting the desired results.

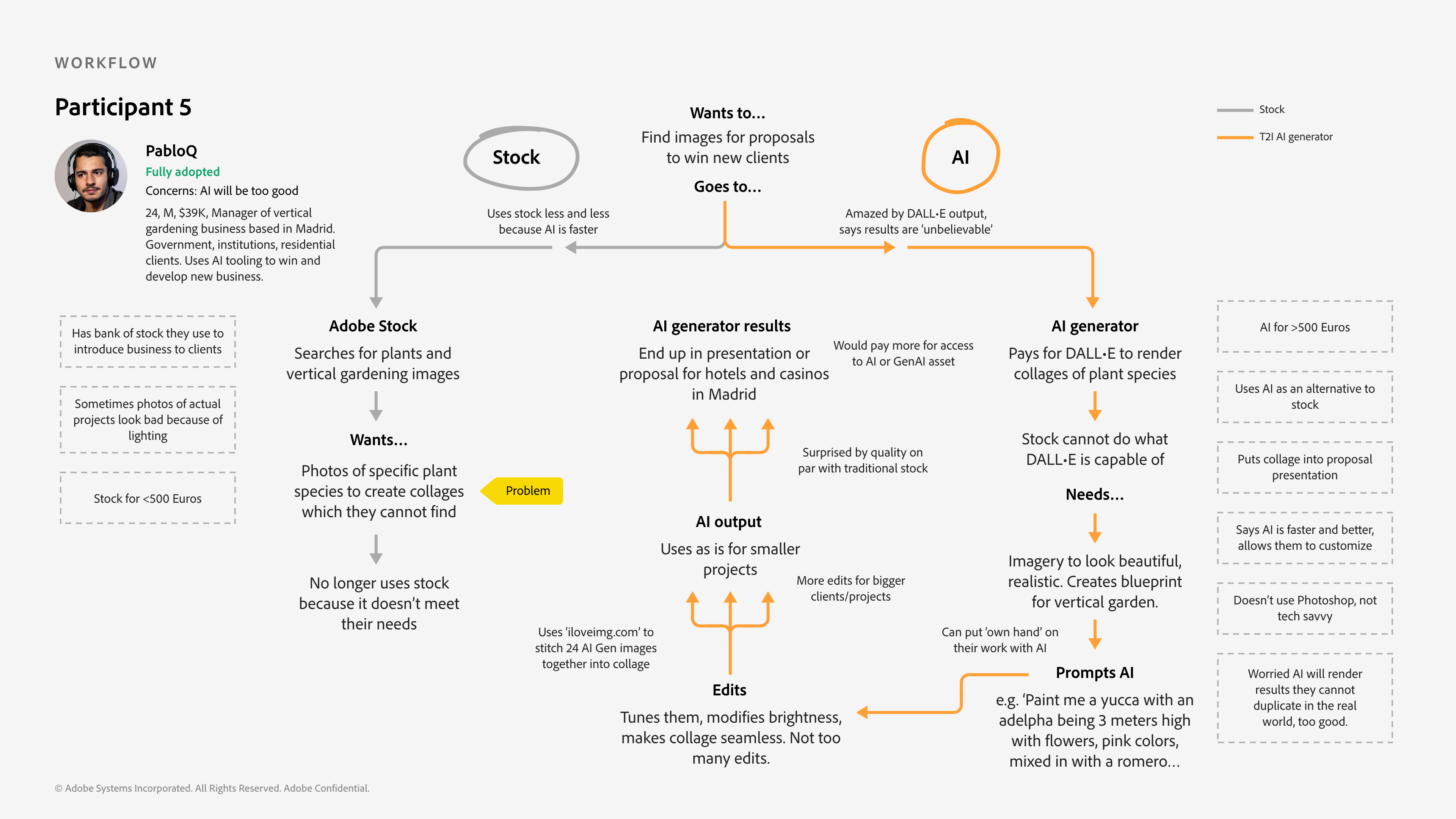

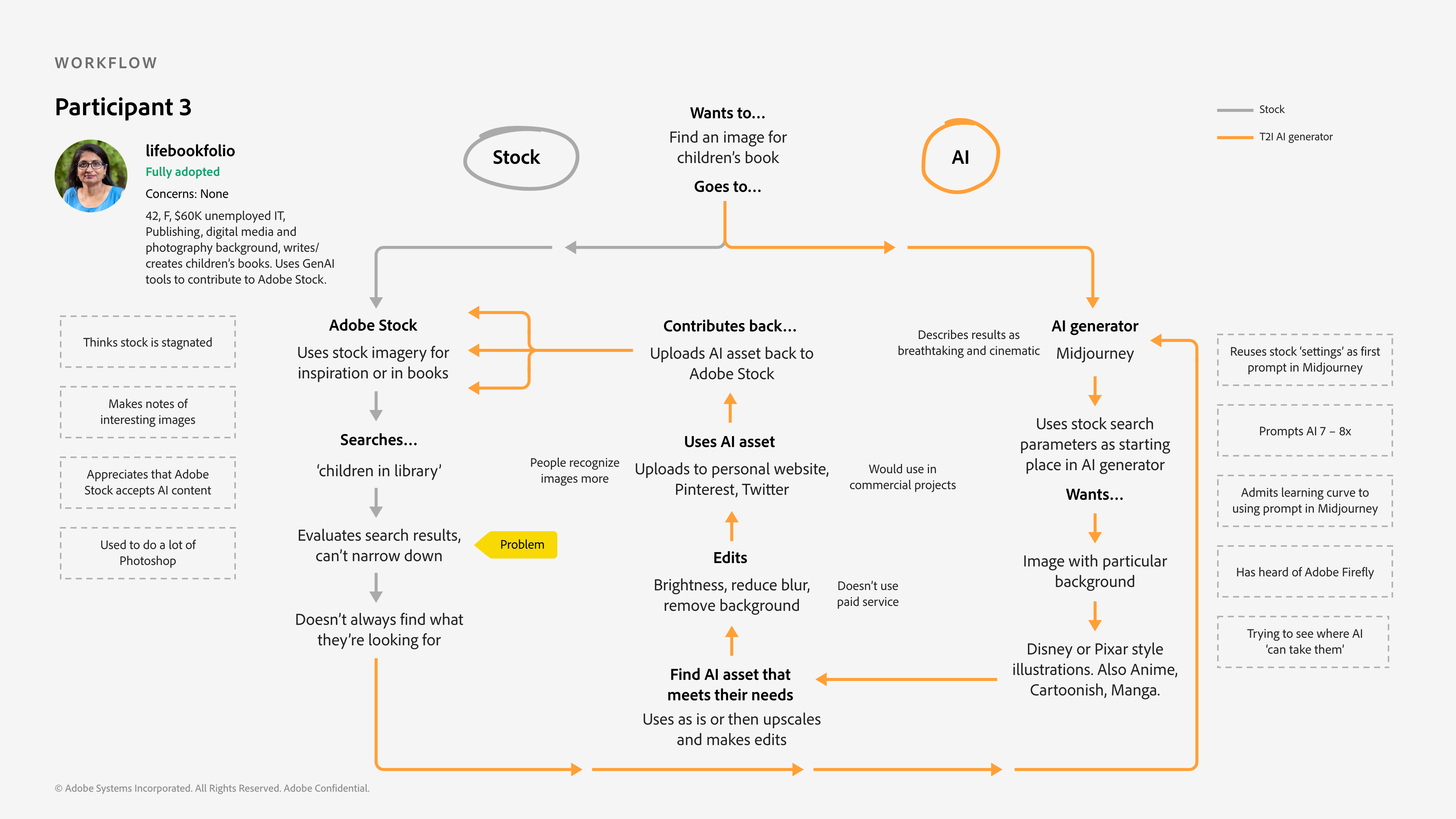

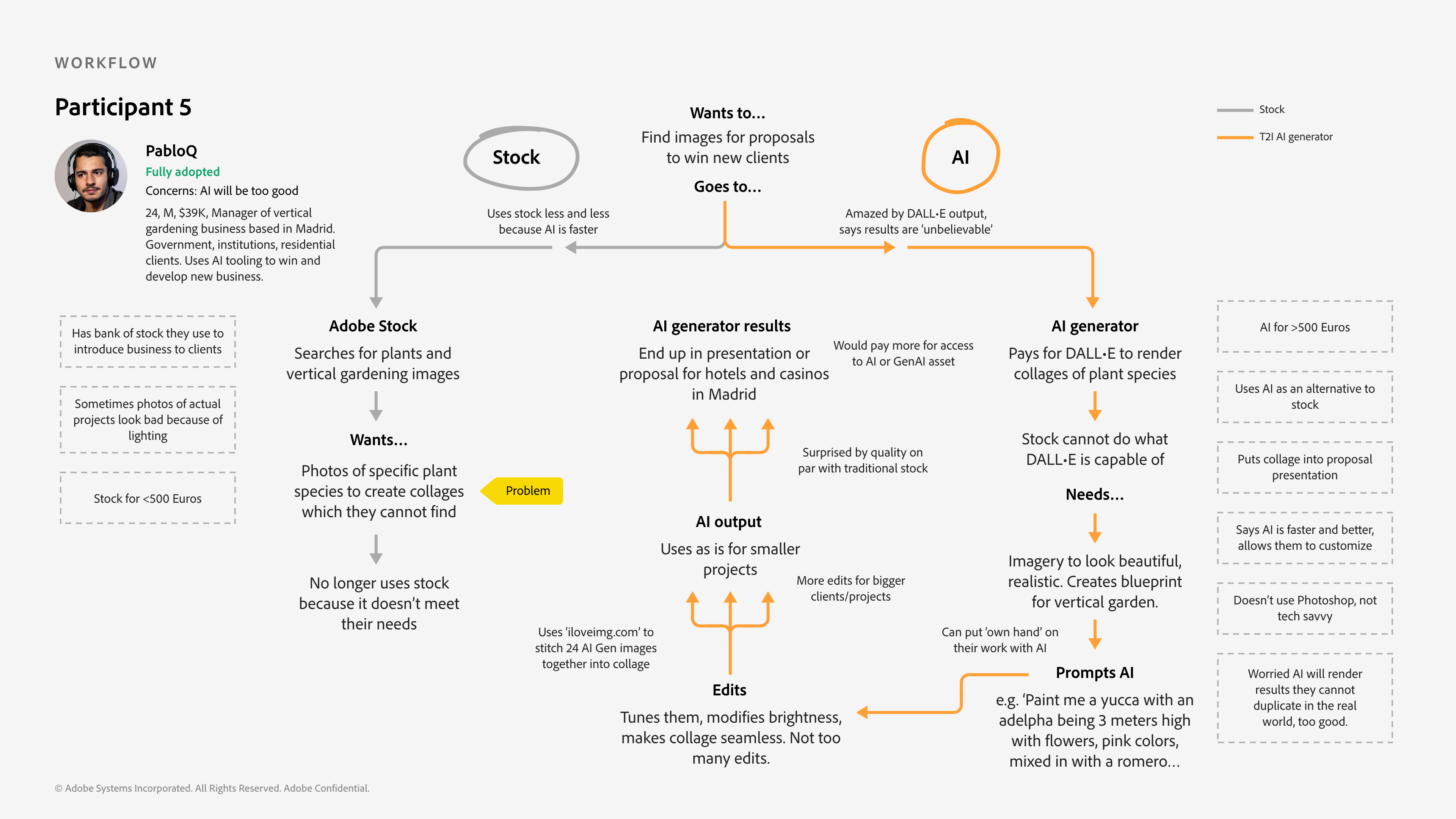

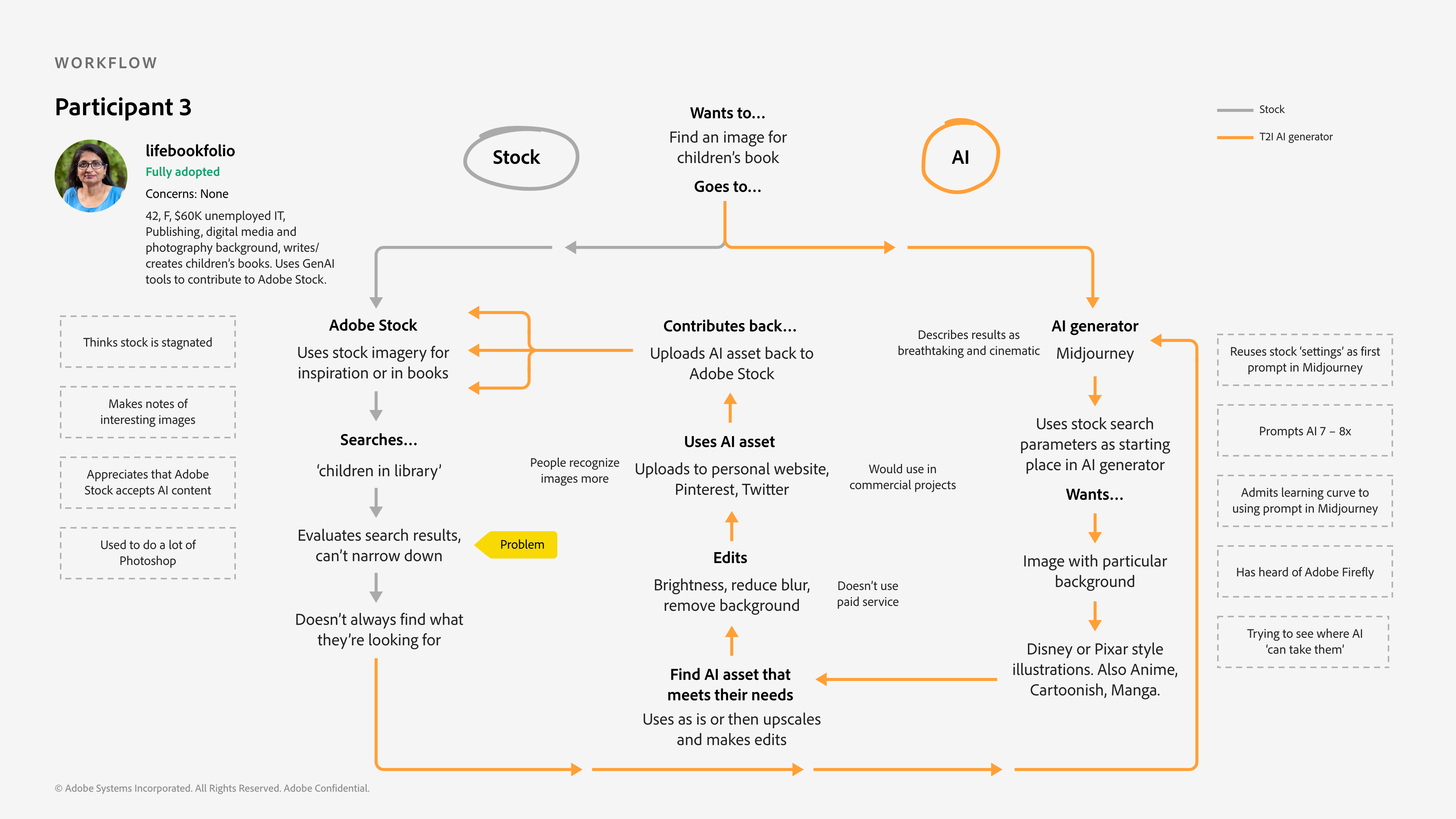

Journey map of participant's workflow. Shows sentiment and desired outcomes.

There were many open questions about the impact of this disruptive technology and how it would benefit stock users. A hypothesis had been created: 'Searching and filtering for stock is onerous and time-consuming and doesn't always yield good or accurate results. Prompting an AI to render images would save time and could improve the quality of the results'. Part of the research was to validate this hypothesis and gut-check the in-progress work. Stakeholders were also curious about which other AI tools were being used, whether participants were willing to use AI-generated assets as is or edited them first, and which AI styles and content types were being used most.

I scheduled and ran the sessions via Usertesting.com in about one week, then shared raw notes and takeaways with stakeholders and PMs working on the Firefly embed inside Adobe Stock. Later I annotated all of the videos, cut them into clips, and created individual journey maps and a companion deck, then socialized the findings across the org and at the VP level. In July I presented an abbreviated version of the research to the rest of the org at the Adobe Stock Product Summit.

Findings

It was definitely more interesting talking to people who were familiar with T2I AI generators and had adopted these new tools into their workflows. In most cases people were moving away from using stock, or were reusing stock they had on hand, to introduce new customers to their business. AI platforms like Midjourney and DALL·E 2 had started to gain more adoption, and in participants' minds the new tools were supplanting traditional stock search methods because they were faster and provided more customized results.

Some participants were using stock as a starting point and, if they didn't find what they were looking for, would move to an AI generator to try to recreate it. Others found the new AI tools superior to stock because they could prompt the AI in a very specific way, making complex collages of imagery they could not find elsewhere.

Shows participant's workflow, sentiments and desired outcomes.

Participants who were less familiar with T2I AI generators were concerned about quality and pointed to the limitations of AI, e.g. poorly rendered hands or distortions. Usage rights also remained a valid concern. Some viewed AI results as premature or not completely ready. Others said they frequently saw unpredictable results and mentioned prompting was 'tricky' or required some luck. Participants also stated they were using AI-generated images for inspiration or were more inclined to use Gen AI content on social media, where the stakes were lower.

Participants prompted an AI 4 to 5 times on average (8 times at most), then made minor edits, including adjusting brightness or contrast, retouching, removing or extending the background, upscaling or improving resolution, sharpening, removing or adding objects, changing colors or adding text.

In the end I brought the stakeholders along as part of the process and gathered their feedback and input so they felt they had some skin in the game. I created a research deck with screener responses, video clips, journey maps and links back to the research plans, raw notes, early takeaways, and the Usertesting.com dashboard. I even printed the journey maps tabloid-sized and brought them to the vision workshop I facilitated in the following weeks.